Through this blog post, which later turns into a far-fetched idea, I intend to answer some questions, both for myself and for others who might be reading.

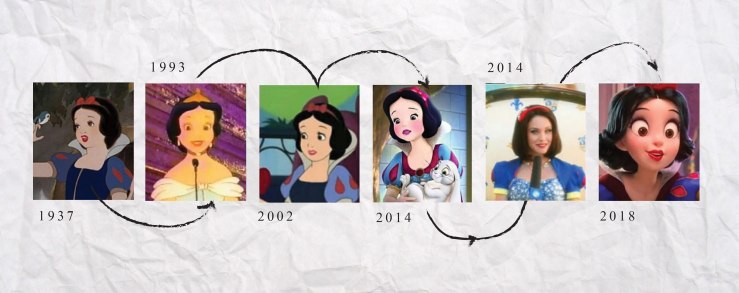

Have you ever wondered how stories like Snow White have been passed down through generations in countries where English is not the primary language? My mother, grandmother, and great-grandmother all know who Snow White is. Is there a story that originated in the Urdu language and has stood the test of time in a similar way?

Stories contribute greatly to children’s imaginative worlds and narrative abilities, and there’s no denying that stories told in ‘home languages’ carry an inevitable relatability because of their specific context.

There’s something I wish I could do about the question posed above. So began the upcoming series of whys, whats, whos, wheres, and hows…

Why Urdu, and not another language?

This section is perhaps more personal. As a Pakistani child growing up in a foreign country, I always felt distant from the Urdu language. Although I learned it formally at school and spoke it frequently at home, I’ve always wondered why I wasn’t as excited about communicating in my mother tongue as I was about communicating in English. A part of me was satisfied with the explanation that I had spent my entire childhood in a place where the primary language was different. Yet the unsettling discomfort I have with Urdu traveled with me to Pakistan, and into adulthood.

What hindrances do Urdu stories face moving down generations?

“Traditionally, in South Asia, the role of the storyteller rests heavily with grandparents who narrate and concoct stories from their own childhood, life experiences, or imagination. But with the breakdown of a traditional family to a nuclear family, that pivotal role has been lost.”

Express Tribune, September 27th, 2011

Lifestyles keep evolving while technology advances rapidly. The young today are addicted to strong sensory stimuli: it’s a visual world out there, paired with sound and movement.

“Education is imparted through books, knowledge is imparted through stories.”

Motto of Tell-A-Tale, an online portal for reading and sharing stories by authors both young and old.

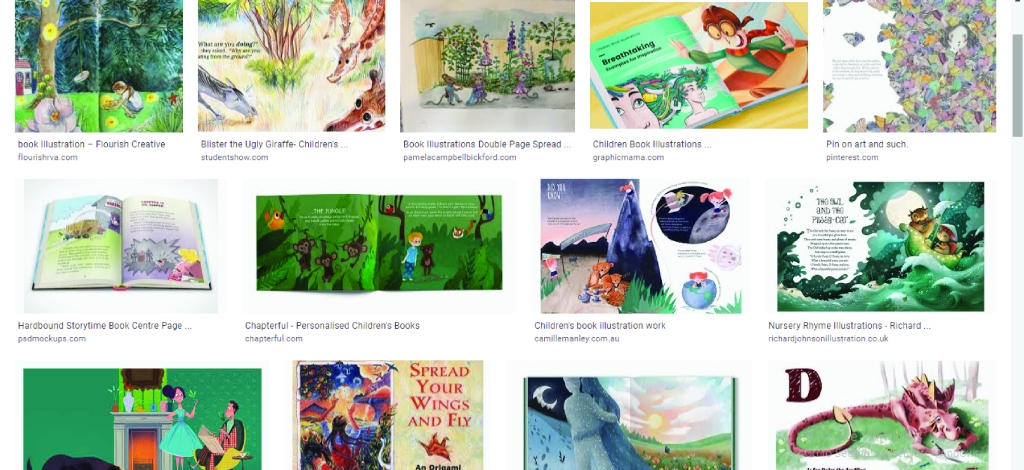

Here’s a demonstration to give you a visual representation of what I’m referring to. I did a simple Google image search for “Urdu storybook” and “English storybook”. The results I got are shown below.

Notice anything?

If treasures of Urdu stories are hidden and confined within memories of adults, how does one extract, document and archive them before it’s too late? Where does one begin? Extracting them orally would probably be the first step.

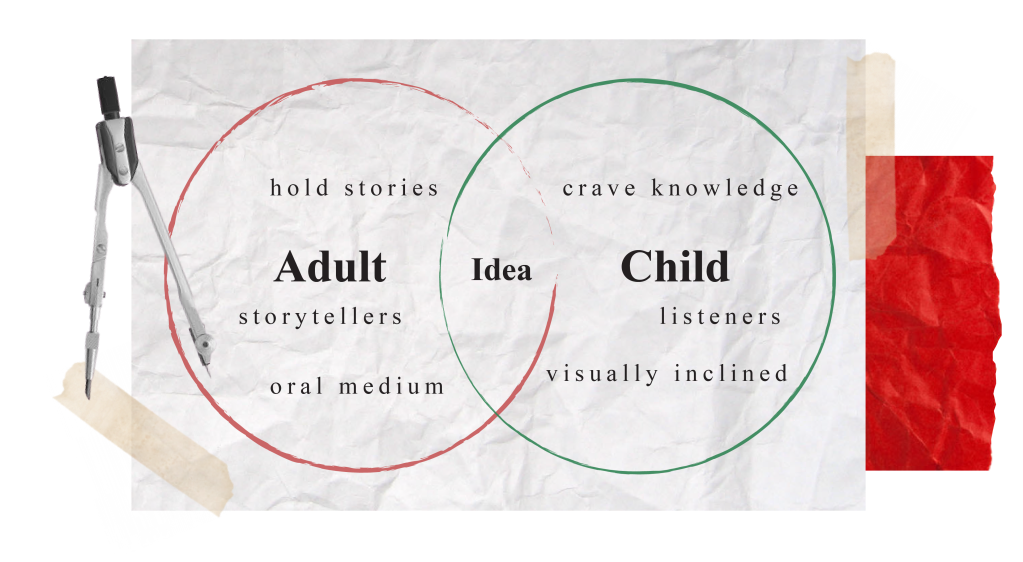

Who is involved in the act of oral storytelling?

“Oral storytelling is the stimulating act of sharing stories, sometimes with improvisation, theatrics or embellishment.” Every culture has its own stories or narratives, which are shared to achieve goals ranging from entertainment and education to cultural preservation and instilling moral values. In oral storytelling, the teller and the listener must be equally involved. The question, therefore, is what can be done to make the telling of a narrative exciting for both, so that the process and the outcome fulfill needs on both ends and the interaction becomes a habit.

“Storytelling involves a two-way interaction between a storyteller and listener. The responses of the listener influence the telling of the story. In fact, storytelling emerges from the interaction and cooperative, coordinated efforts of teller and audience.”

The National Storytelling Network

Below are some efforts made towards reviving oral Urdu storytelling in Pakistan:

Left to right:

- Qissa Kahani by British Council Pakistan – an interactive storytelling session where they re-tell Urdu folktales and classics.

- Storykit Program – Musharraf Ali Farooqi Using Storytelling to Teach Children Urdu.

- Kahani Time – reincarnating the long lost tradition of Urdu bedtime stories.

What next?

It is clear that oral storytelling is a significant way to draw out and reveal stories that are at least a generation old. The question is, what makes someone want to tell a story? Oral storytelling as an art form can jolt one’s ability to recollect, rethink, and retell what happened in their life. Fueled by nostalgia and sentimentality, the process of crafting a true experience into a story to be shared can magnify its meaning. This is true even for someone simply recalling something they were once told.

What makes a child want to hear a story? When disseminated information is acquired by a listener, its meaning expands even more. A story has the ability to develop to a point where it becomes part of a listener’s lived experience and inner resource, and children are most susceptible to this phenomenon. Storytelling happens in many ways. Some situations demand informality while others require a different decorum. Some demand certain themes, attitudes, and artistic approaches. Keeping that in mind and considering the developmental stage at which today’s child begins to learn language, I intend to merge oral storytelling with visuals in motion!

Idea!

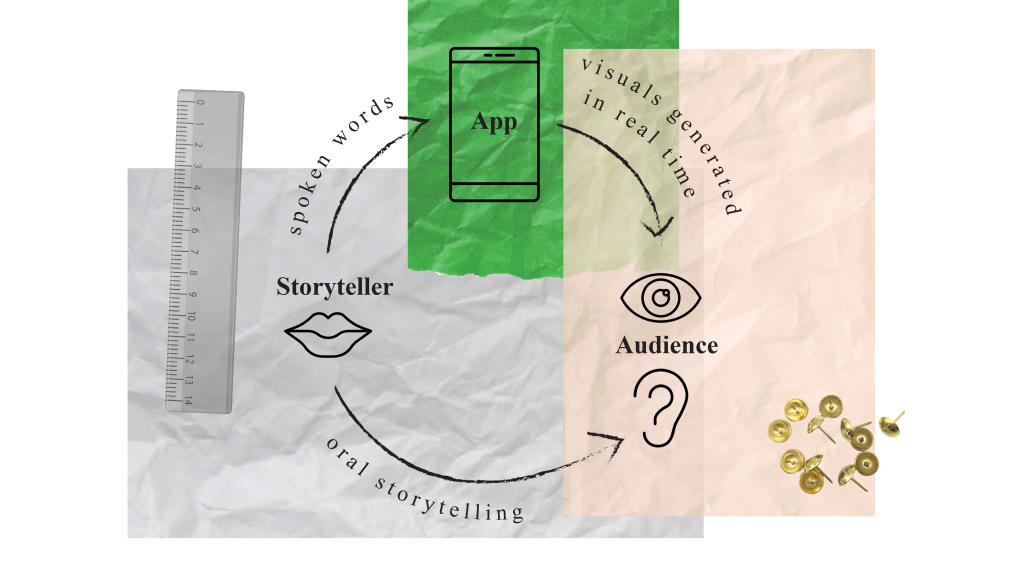

Succinctly put, what if there was an app that gave visuals to the words formed by the storyteller’s mouth in real time? There’s a lot that needs to be resolved before this is smooth-running, but there’s no doubt that it could exist…eventually. Marrying the joy of delving into memories and experiences to form a narrative in Urdu with the thrill of listening to stories while watching the plot unfold sounds like a near-perfect solution! Due to limited time, knowledge, and finances, it will be decades before this idea can become a reality. But there’s nothing wrong with a ‘go big or go home’ approach.

What is the medium?

The intimate nature of oral storytelling would be preserved only if gadgets that are already at hand are put to use. The astonishing familiarity that children have with mobile phones is an advantage that can be exploited. I believe the already-dying act of oral Urdu storytelling must be aided, not made more cumbersome. An application that can be used on devices such as phones and tablets is, therefore, a beneficial medium.

How would the app work?

Much like Siri, Alexa and Google Home, the app will involve technologies such as speech recognition and Natural Language Processing. Simply put, once activated, the app would convert the storyteller’s speech into text. It would then recognize keywords and pull the corresponding imagery from its inbuilt library. After this, the imagery would be animated based on the action words spoken during storytelling. If you’re thinking this sounds fairly simple, there are a lot of back-end processes that you and I are both unaware of. If you’re thinking that a lot needs to be resolved before this produces satisfactory results, you are absolutely right.
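To make the steps above a little more concrete, here is a minimal sketch in Python of the "keywords to imagery and actions" part only. It assumes speech recognition has already produced a romanized transcript, and the vocabulary tables, file names, and the `plan_scene` function are all hypothetical names I made up for illustration. A real app would need proper Urdu language processing rather than plain word matching.

```python
# Hypothetical vocabulary tables: romanized Urdu keywords mapped to
# asset files and to animation names. These are illustrative only.
ASSET_KEYWORDS = {"bhaalu": "bear.png", "sooraj": "sun.png", "baadal": "cloud.png"}
ACTION_KEYWORDS = {"bhaaga": "run", "soya": "sleep"}


def plan_scene(transcript: str) -> list[tuple[str, str]]:
    """Turn a transcript into an ordered list of (asset, action) events.

    An asset keyword shows that asset; an action keyword animates the
    most recently mentioned asset. Unknown words are ignored.
    """
    events = []
    current_asset = None
    for raw_word in transcript.split():
        word = raw_word.strip(".,!?").lower()
        if word in ASSET_KEYWORDS:
            current_asset = ASSET_KEYWORDS[word]
            events.append((current_asset, "show"))
        elif word in ACTION_KEYWORDS and current_asset is not None:
            events.append((current_asset, ACTION_KEYWORDS[word]))
    return events


if __name__ == "__main__":
    for event in plan_scene("Bhaalu jungle mein bhaaga, phir soya."):
        print(event)
```

Attaching each action to the last-mentioned asset is a deliberate simplification. It breaks as soon as a sentence mentions two characters, which is exactly the kind of problem the real language-processing layer would have to solve.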

Where will the imagery come from?

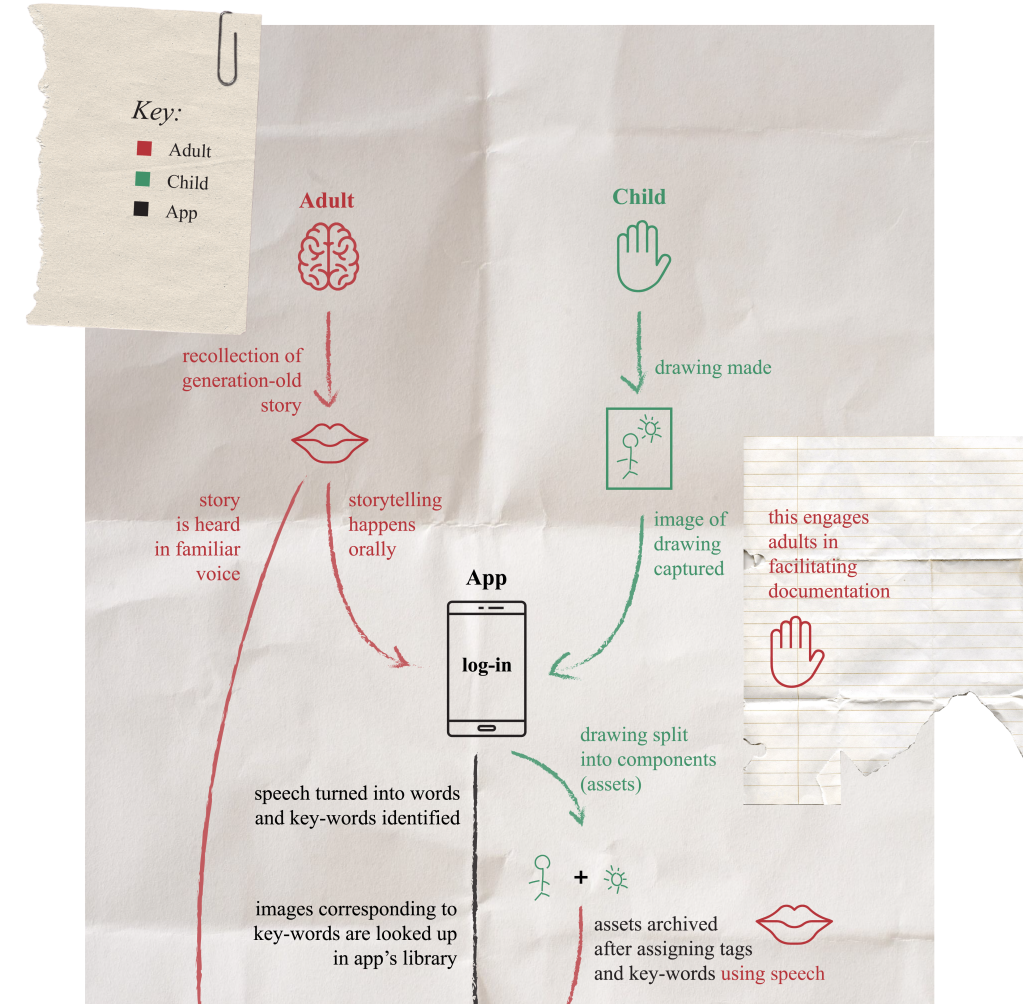

Here’s the fun bit! Much like the emoticon libraries we have on our phones, the app will come with a default set of hand-drawn images (assets) produced by children aged 4 to 10. According to Viktor Lowenfeld’s stages of artistic development, these are the preschematic (4-7) and schematic (6-11) stages. Imagery will range from spaces (such as bedrooms, forests, beaches, etc.) to inanimate objects (such as trees, cars, spoons, etc.) as well as moving beings (such as children, dogs, insects, etc.). In addition to these, teachers and parents can take pictures of drawings made by their children and build an extensive personal library within the app. These drawings can be given multiple tags so that, when the corresponding keywords are spoken, a child can watch their own creation come to life as part of a story!

Preschematic stage:

– This stage is when circular shapes begin to appear, with lines to help them look like figures.

– The understanding of space hasn’t developed yet, therefore objects are placed in a haphazard way.

– The use of color is more emotional than logical.

https://www.d.umn.edu/artedu/Lowenf.html

Schematic stage:

– This stage is recognized by the demonstration of space awareness.

– Definite base-lines and sky-lines become apparent and all components are spatially related.

– Colors are reflected as they appear in nature.

https://www.d.umn.edu/artedu/Lowenf.html

Why use children’s drawings when we can have professional illustrations?

This is where a few combined theories come into play. According to the Reggio Emilia approach, a child possesses strength, competence, and potential. Children are viewed as protagonists, occupying the primary active role in their education and learning. A child is understood as having an innate desire to discover, learn, and make sense of the world. One of the first things children do, as they learn how to pick up tools, is pick up a pencil and engage in mark-making. According to the Early Childhood Education Journal, Vol. 29, No. 2, Winter 2001, Loris Malaguzzi (1994) summed up this idea when he eloquently described children as being “authors of their own learning”.

The Reggio approach also views a child as a researcher, and the environment as an educator. While engaging in tasks, children explore, observe, discuss, hypothesize and represent. Their representations, in the form of the drawings they construct, are potent material with far more potential to enhance authentic and long-lasting learning than perfectly generated representations of the world (professional illustrations). In this case, they would be learning how to engage in listening, aided by their own drawings.

An app of this sort would indirectly encourage children to engage in producing more representations of their surroundings, which would then cause their families to participate as facilitators in taking pictures of their drawings and adding them to the app library. Subsequently, their drawings get documented and archived.

Getting into the nitty-gritty…

The whys and whats end here! Moving forward, based on very limited knowledge and a whimsical approach, I will solely be talking about how I think this application would work. I am not, by any means, a user-experience or user-interface designer, or even an app developer. Also, there are some very real aspects of AI technology that I’m completely ignoring here. What keeps me motivated is watching technology like Siri grow. If Google Home today can recognize and respond to up to four languages interchangeably, it’s only a matter of time before Urdu becomes part of that list.

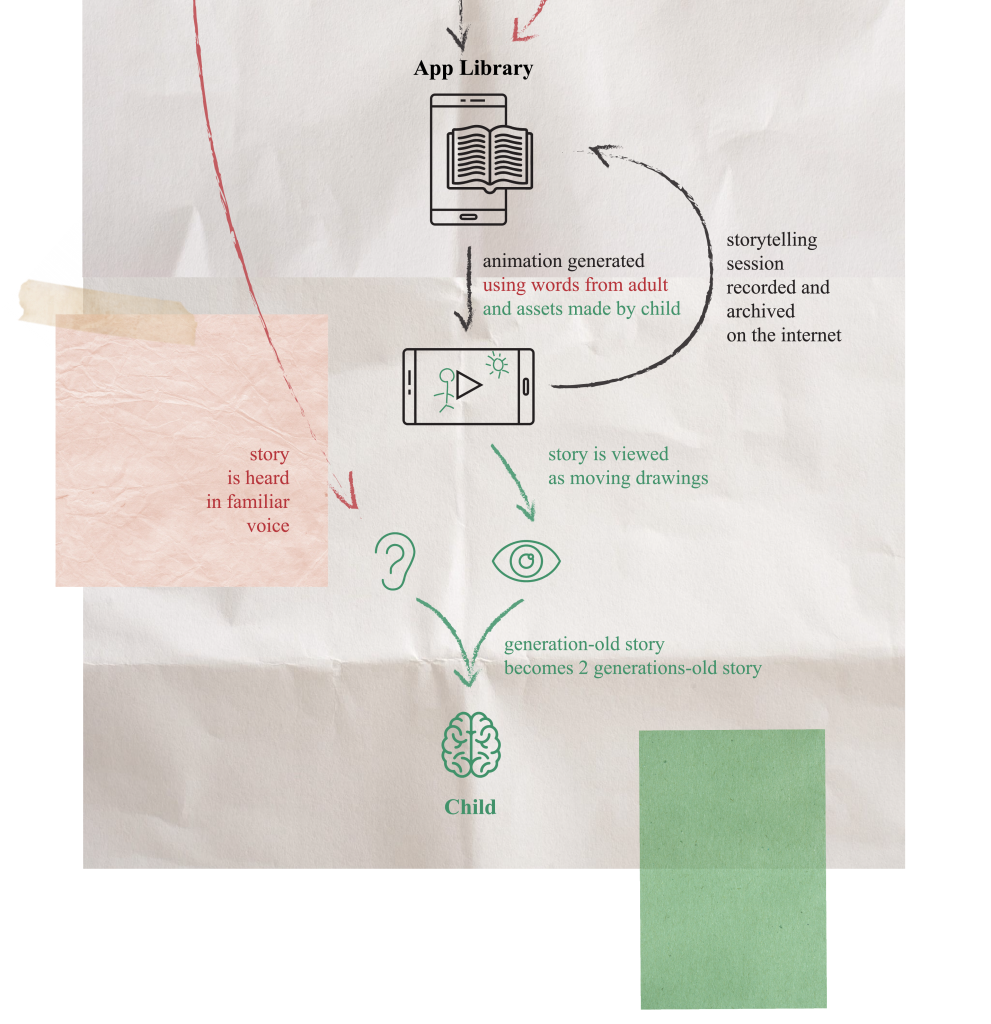

Below is another diagram (excuse the slit in between) of how I expect adults and children would interact with the app.

The diagram above can be a little confusing due to its non-linear and partially looped nature. But after looking at it closely, I was able to break the process down into the major goals that I recognized would be achieved. Below is a simpler, more deconstructed version of what you saw above.

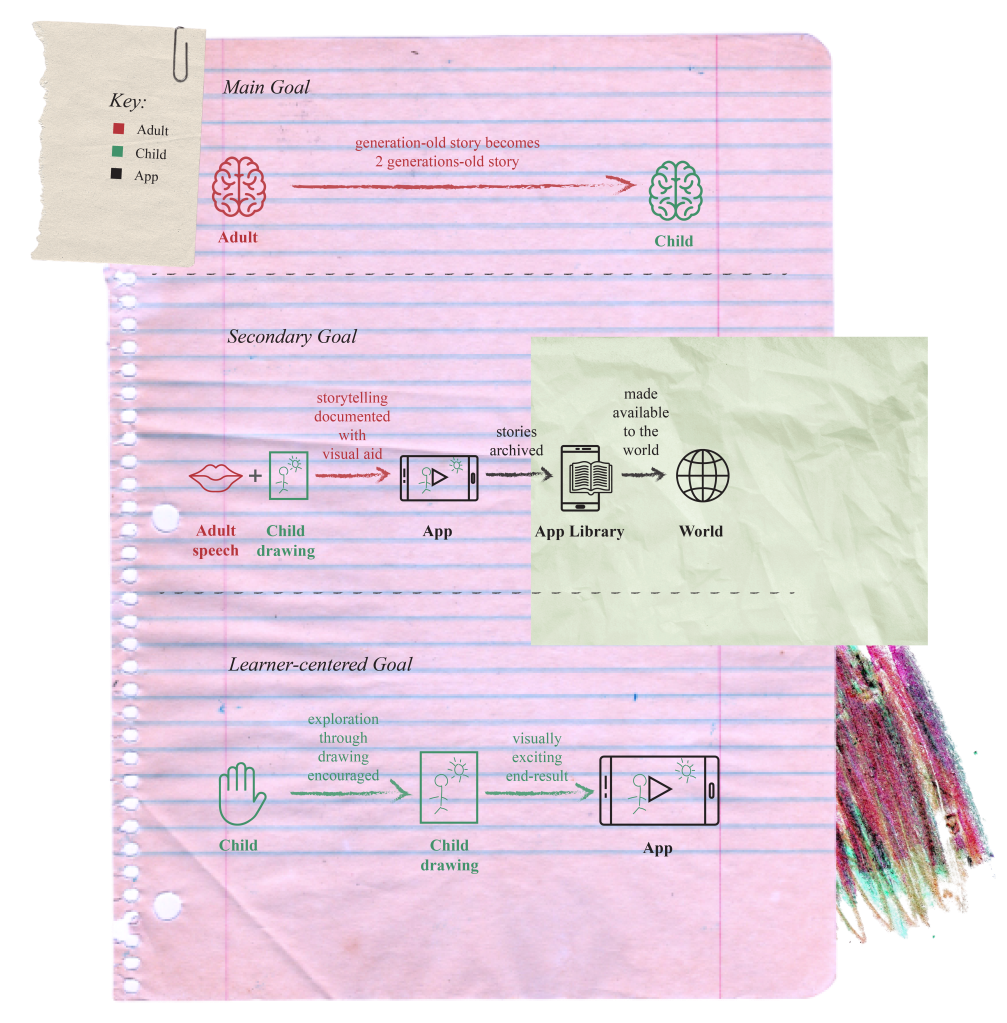

MAIN GOAL:

This comes from the very first question posed at the beginning of this blog: is there a story that originated in the Urdu language and has stood the test of time? This goal is more intimate in nature because it stems from the urge to habituate storytelling in Urdu.

SECONDARY GOAL:

Although called the secondary goal, this aids the main goal by preserving Urdu stories told orally and making them available to the entire globe; in other words documentation, archival, and availability!

LEARNER-CENTERED GOAL:

This goal would be achieved due to the nature of the app. This goal also takes into account the inclination and excitement towards viewing one’s own drawings in motion. As more stories are recalled, parents would further encourage their children to visually represent characters and environments that perhaps would not exist in the default asset library that comes with the application, thus aiding the child’s exploratory learning and drawing skills.

Capturing and animating drawings…

Aside from the AI aspects, this is the toughest one to pull off.

Firstly, this is because child-made drawings consist of components that aren’t always confined to closed-off shapes. Secondly, drawings are stationary, and making them move in a meaningful manner requires deconstructing a drawing into simple parts so that each of them can move independently.

Here’s an example:

1. Capturing the drawing

I instructed a 3-year-old (3 years and 7 months to be specific) to draw something from her surroundings. Here is the outcome.

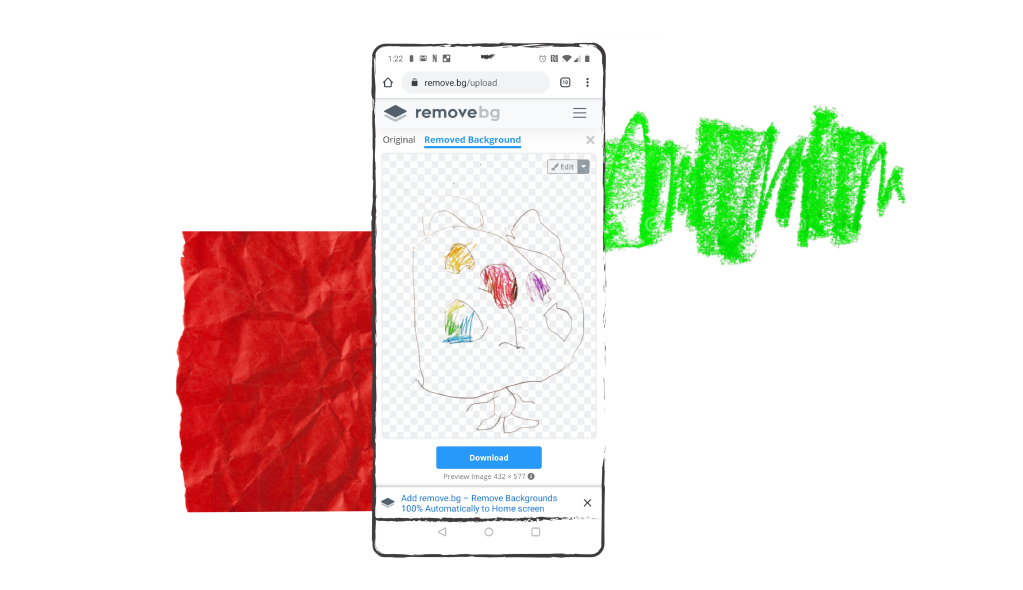

Out of the images above, one was chosen (bhaalu) and a picture of it was taken with a phone camera. The drawn figure needs to be separated from its background to produce an asset that can be saved into the app’s asset library.

2. Isolating components within the drawing

How easy is it for technology to recognize figures and differentiate them from each other and the background? A good example would be the website remove.bg. Here’s the result I got when I subjected this drawing to the free online background removal process.

Remarkably, the result was quite successful! This is the evidence I required to be able to move forward. Below is a rough idea of how the app would allow its user to select parts of a child’s drawing, and remove them from their surroundings. Upon creating a fluid selection by a single unbroken finger movement, only the figure within will turn into one usable asset.
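I don’t know how remove.bg works internally, so the following is not its method. It is only a minimal sketch of the simplest form of the idea, assuming dark pencil strokes on light paper and a photo already converted to a grid of grayscale values (0 is black, 255 is white). The function names and the threshold of 128 are my own assumptions.

```python
def stroke_mask(gray, threshold=128):
    """Mark every pixel darker than the threshold as part of the drawing."""
    return [[value < threshold for value in row] for row in gray]


def bounding_box(mask):
    """Return (left, top, right, bottom) around the marked pixels, or None."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for row in mask for x, marked in enumerate(row) if marked]
    if not rows:
        return None  # nothing dark enough to count as a drawing
    return (min(cols), min(rows), max(cols), max(rows))


if __name__ == "__main__":
    # A tiny 4x4 "photo": light paper with three dark stroke pixels.
    photo = [
        [255, 255, 255, 255],
        [255, 40, 30, 255],
        [255, 255, 20, 255],
        [255, 255, 255, 255],
    ]
    print(bounding_box(stroke_mask(photo)))
```

A fixed threshold would struggle with shadows, coloured crayons, or lined paper, which is why a user-drawn selection on top of an automatic pass seems like a sensible combination.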

3. Giving the asset a ‘type’

After the asset is isolated from its surroundings, the user would be asked to place it in one of three categories:

1. Space

2. Object

3. Being

This figure clearly goes into ‘being’.

4. Tagging asset with keywords

After the asset has been categorized by type, it must be tagged with keywords. This is a very important step: if an asset is not tagged correctly, it will not appear during storytime. The tagging process in the app would take place through speech, which gives the AI technology an opportunity to learn the Urdu accent of a potential storyteller. Below is a video of me tagging this asset with my voice.
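Here is a rough sketch of how an asset with a type and several tags might be stored and looked up. Everything in it (`AssetType`, `Asset`, `AssetLibrary`, the field names) is hypothetical, and it assumes the spoken tags have already been converted to text.

```python
from dataclasses import dataclass, field
from enum import Enum


class AssetType(Enum):
    """The three categories an asset can be placed in."""
    SPACE = "space"
    OBJECT = "object"
    BEING = "being"


@dataclass
class Asset:
    name: str
    asset_type: AssetType
    tags: set[str] = field(default_factory=set)


class AssetLibrary:
    """Index assets by tag so that a spoken keyword can find them."""

    def __init__(self):
        self._by_tag = {}

    def add(self, asset: Asset) -> None:
        if not asset.tags:
            # An untagged asset could never appear during storytime.
            raise ValueError("an asset needs at least one tag")
        for tag in asset.tags:
            self._by_tag.setdefault(tag.lower(), []).append(asset)

    def find(self, keyword: str) -> list[Asset]:
        """Return every asset tagged with the keyword (case-insensitive)."""
        return list(self._by_tag.get(keyword.lower(), []))


if __name__ == "__main__":
    library = AssetLibrary()
    library.add(Asset("bhaalu drawing", AssetType.BEING, {"bhaalu", "bear"}))
    print(library.find("Bhaalu"))
```

Indexing by tag rather than by asset keeps lookups cheap while a story is being told, at the cost of a little extra work whenever a drawing is added.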

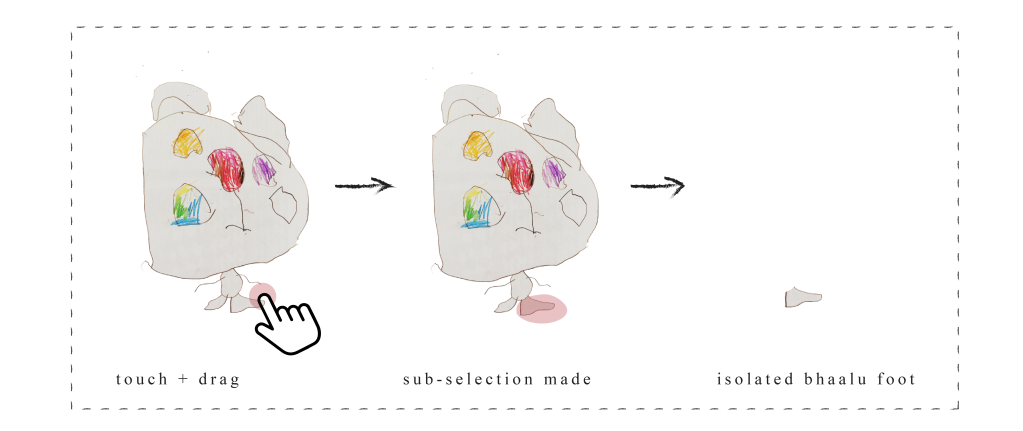

5. Sub-selecting parts of asset and giving them keywords

After the main asset has been tagged with the right keywords, it can be sub-selected to define different anatomical parts! This is optional, of course. Assigning keywords to parts of an asset would allow the software to animate it better when need be. Here’s an example of sub-selecting an asset:

6. Watch Bhaalu come to life!

Something I noticed…

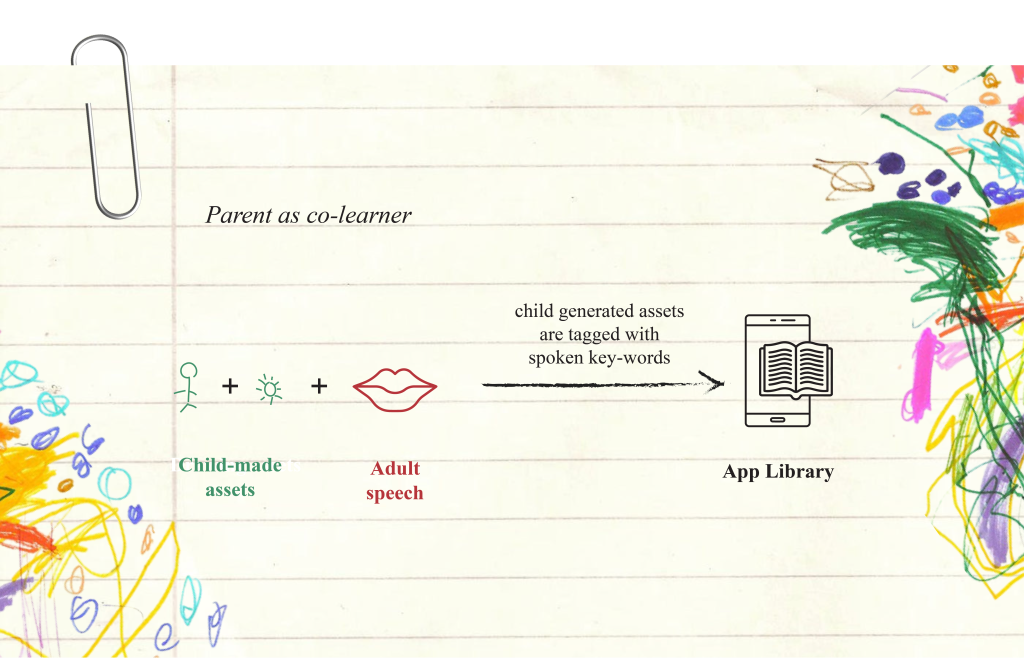

During the selection of keywords for different assets, adults inevitably become co-learners and collaborators with children. Hence, they unconsciously take part in the Reggio Emilia approach. To decipher children’s drawings, adults would apply investigative techniques in order to better understand a child’s motivations and decisions. For example, a parent might want to confirm whether a certain part of a figure that they assume is an arm really is an arm. Therefore, during the tagging-by-speech process, an adult and a child would be brought together to work as a team.

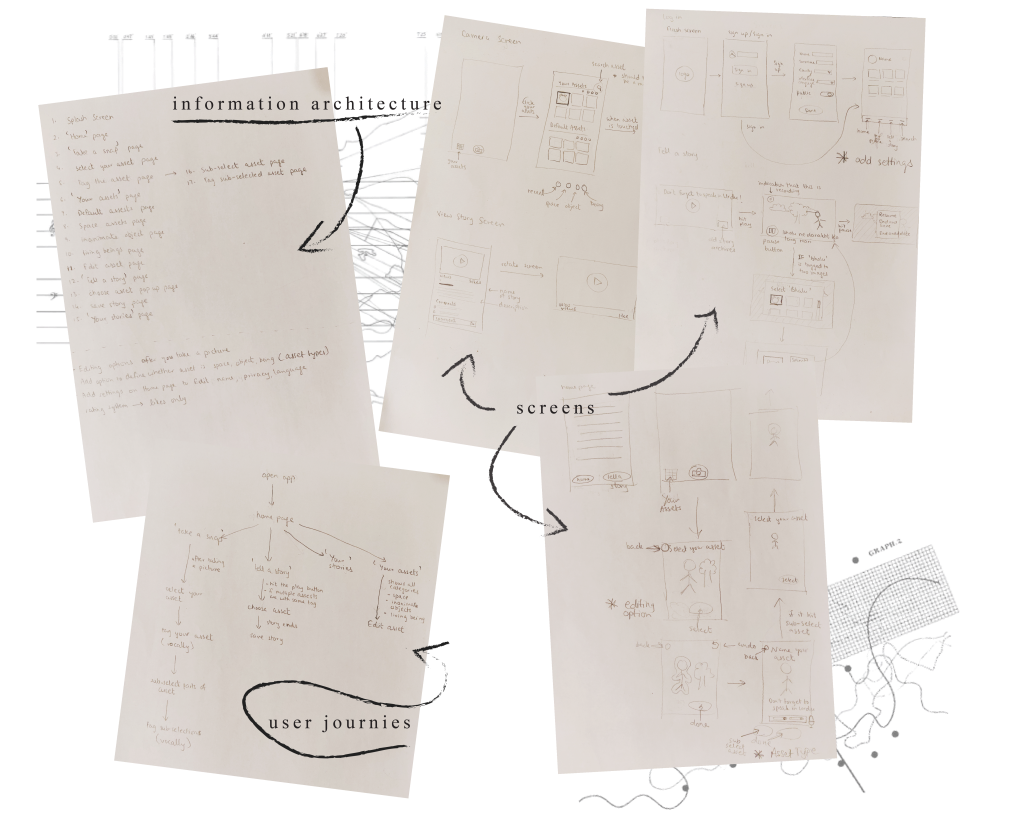

The app’s experience

Now once again, I’m no UI/UX designer, but I put myself to the task of generating the Information Architecture, User Flow, and a few screens of this potential app idea. Because this app would involve a high level of user input, for it to work correctly and smoothly, it was vital that I give the experience and the interface a go.

User-friendly fun features…

Keeping the users and beneficiaries in mind, the app would have the following features:

1. Option to change the interface language

Although the app is intended for Urdu storytelling, the interface language can be switched. This means that stories can be recited in Urdu while the interface stays in English or Urdu, whichever the user prefers.

2. Tagging assets with speech

This one’s been mentioned before. Instead of typing in tags and/or keywords, you have the option to ‘say’ them into your phone.

3. Image editing of the drawing

Not everyone is around the best lighting or has the ability to take a perfectly straight photograph. For this reason, the app would have built-in editing features to help you achieve the best quality asset.

4. Same tag, different outcome

In a case where the same tag is given to multiple assets (for example the asset library has 3 assets tagged ‘sooraj’), the app allows the user to choose which one they would like to use for a particular story.
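A minimal sketch of that rule, assuming assets are referred to by simple names and that the choices made for a given story are kept in a dictionary. The function name `resolve_asset` and its parameters are hypothetical.

```python
from typing import Optional


def resolve_asset(tag: str, candidates: list[str],
                  story_choices: dict[str, str]) -> Optional[str]:
    """Pick the asset to show for a tag within one story.

    Returns the only candidate if there is just one, the user's earlier
    choice for this story if there are several, or None when the app
    still needs to ask the user to choose.
    """
    if not candidates:
        raise ValueError(f"no asset is tagged {tag!r}")
    if len(candidates) == 1:
        return candidates[0]
    chosen = story_choices.get(tag)
    if chosen in candidates:
        return chosen
    return None  # several matches and no choice yet: prompt the user


if __name__ == "__main__":
    suns = ["sun_a", "sun_b", "sun_c"]
    print(resolve_asset("sooraj", suns, {}))
    print(resolve_asset("sooraj", suns, {"sooraj": "sun_b"}))
```

Keeping the choice per story, rather than globally, lets the same ‘sooraj’ tag show a different drawing in a different story.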

5. Colour your imagination

Assets, whether default or personal, would be able to change colour while the story is being told! For example, if a ‘baadal’ asset is originally white and the story requires it to be pink, you just have to say so, literally.
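As a very rough illustration, here is how a spoken sentence could be scanned for a colour word and an asset tag once it has been turned into text. The colour table, the romanized spellings, and the `colour_commands` function are assumptions of mine, and real speech would need far more than word matching.

```python
# Hypothetical colour vocabulary: English and romanized Urdu words
# mapped to RGB values. Illustrative only.
COLOURS = {
    "pink": (255, 192, 203),
    "gulabi": (255, 192, 203),
    "red": (255, 0, 0),
    "laal": (255, 0, 0),
}


def colour_commands(utterance: str, asset_tags: set[str]) -> dict[str, tuple]:
    """Map each asset tag mentioned in the utterance to the first colour
    word found in it. Returns an empty dict if no colour was spoken."""
    words = [w.strip(".,!?").lower() for w in utterance.split()]
    colour = next((COLOURS[w] for w in words if w in COLOURS), None)
    if colour is None:
        return {}
    return {w: colour for w in words if w in asset_tags}


if __name__ == "__main__":
    print(colour_commands("Baadal gulabi ho gaye!", {"baadal", "sooraj"}))
```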

App wireframes

Using basic software like Balsamiq Wireframe and InVision, I generated a wireframe prototype for you to experience the potential app firsthand! Follow the link below.

Due to the ever-evolving and vast nature of technology, I couldn’t help but apply the best I knew to a single app. I was asked questions by concerned individuals, parents, developers, and designers such as:

“You want this to happen in real time!?”

“Did you take into account the processing time AI takes before it can show you a result?”

“Urdu is spoken in so many dialects and accents, which one will this app work on?”

“Do you know how heavy this application would be?”

“How would you animate adjectives like ‘glorious‘?”

To all these questions, I say, all in due time. We could not have imagined a world where switching off lights could be done with a single command, and yet it has been made possible. All we have to do is allow ourselves to succumb to our imagination and let the whimsy side take over.